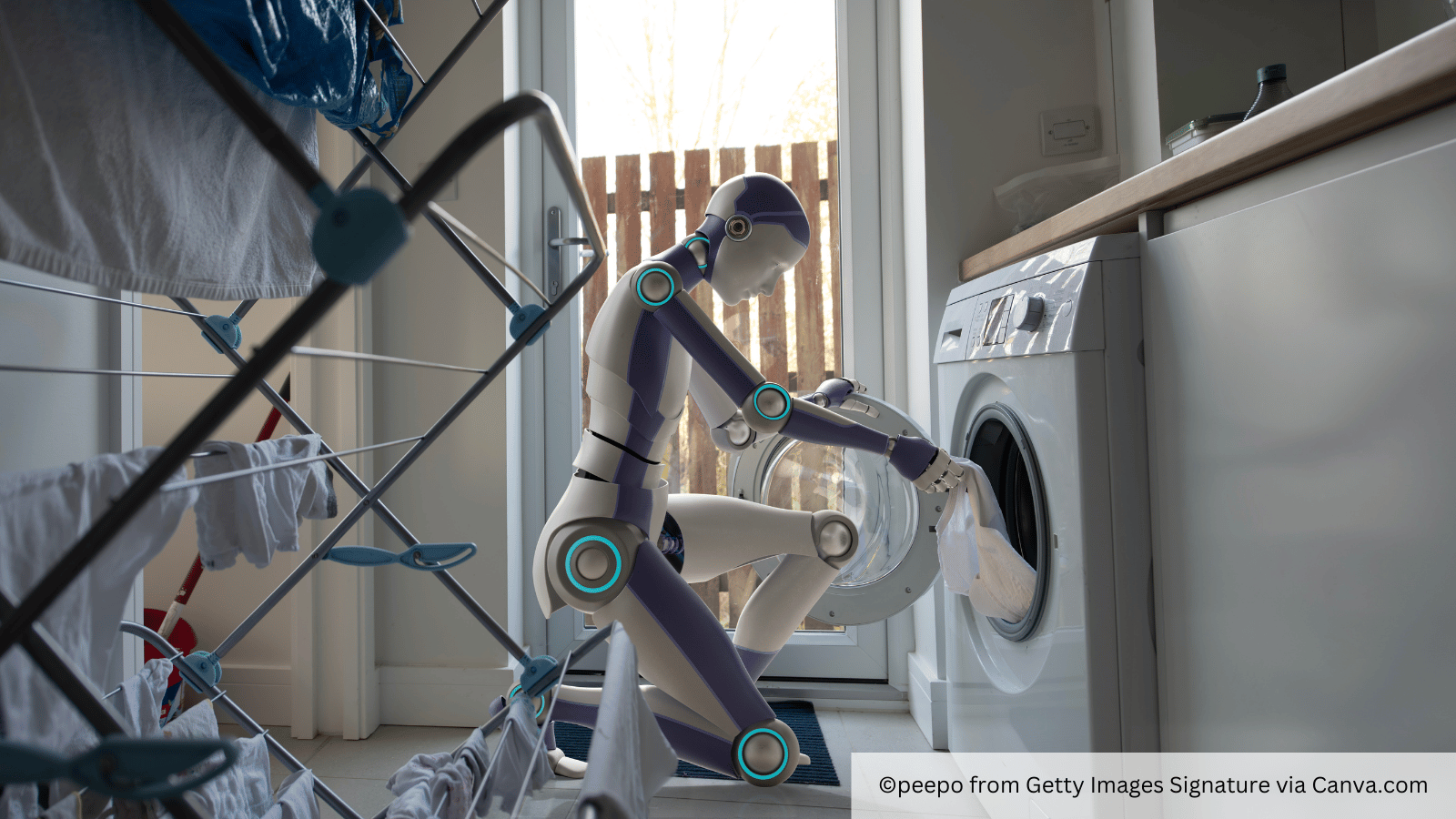

“You know what the biggest problem with pushing all-things-AI is? Wrong direction.

I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.”

Last March, author Joanna Maciejewska posted the above on X, and it quickly went viral. Her post (can we call them Tweets anymore?) bemoaned the fact that the tech had, perhaps, gone too far.

She went on to clarify: “…This post isn't about wanting an actual laundry robot. It's about wishing that AI focused on taking away those tasks we hate (doing taxes, anyone?) and don't enjoy instead of trying to take away what we love to do and what makes us human.”

I think Joanna has a point.

When AI first entered the scene, it was presented, like the technology that came before it, as a means of improving users’ lives: making work easier to manage, and offering virtual assistants that could cut down the time spent on manual, menial tasks so that our human brains could focus on the more creative stuff.

But we have now reached an age in which creative work is increasingly falling within the remit and capabilities of the machines.

AI and Authenticity

Take the arts, for example. Illustrators are facing the reality that their job opportunities are becoming increasingly limited by AI’s growing capability to produce a custom-made image or illustration in a fraction of the time. And it’s not just the illustrators who are suffering.

On Threads, one author posted in a panic after the company she’d contracted to provide the cover art for her new book sent back digitally-made images. Her worry? Replying to comments on her post, she noted that not only had she not approved the use of AI-generated images for the job, but a human illustrator could likely have enjoyed and benefitted from the work far more than a machine.

There’s also the issue of authenticity to consider. Many regard AI images as disingenuous. People can feel duped when they spot that a piece of art is AI-generated rather than human-made, especially when it hasn’t been made clear to them that what they’re looking at is computer generated, and as a result may decide that the image is of lesser quality or worth.

This has repercussions. In the last 12 months there have been a number of controversies over publishing houses choosing to use AI-generated images on book covers. In response, the Society of Authors (SoA) published a paper in which it noted the “potential benefits of machine learning” but warned that AI carried significant risks that could not be ignored. Safeguards, the Society said, needed to be put in place swiftly to ensure that the creative industries “will continue to thrive”.

Trust, too, is put to the test. Watching social media grapple with whether Swifties in circulating images were really wearing pro-Trump t-shirts, and whether Justin Trudeau was really recommending politically skewed books, has only compounded the feeling that anything involving AI should be treated with a healthy amount of scepticism.

So why would media outlets want to embrace AI in their writing?

Setting Boundaries

At the end of last year I attended the Newsrewired conference in London, an event that brings together editors, journalists, publishers, other communications professionals and academics to discuss and explore the latest trends in digital publishing.

Unsurprisingly, a key focus of this event was the growing influence and use of AI in writing and media production.

The general consensus from this event? We simply can’t avoid AI. “Whether we like it or not, we have to acknowledge AI or ignore it at our own peril,” Nina dos Santos, a former CNN anchor and freelance journalist, commented during the closing keynote talk.

Now that AI has the capability to write, people will use it. And it’s not just small, individually run blogs looking to boost growth and engagement by increasing their posting capacity but, more and more, under-budgeted and pressured news outlets and magazines trying to keep pace with the news cycle.

But is it right to use these tools? Despite their cleverness and their ability to do the heavy lifting of content generation, the general consensus at the conference seemed to be “no”.

Tools like ChatGPT or Claude can write an article on seemingly any topic and in any style, given the right prompts, and the text they produce can sound convincingly human. But in doing so, several concerns arise…

Where has that information been pulled from? Can those sources be trusted (would a trained, accredited journalist have approached those same sources for information, for example)? Can those sources be credited for their contributions? In short, AI lacks transparency and cannot take the care and consideration a human writer would. And sometimes, its content can be utterly false.

In fact, when asked to come up with an article that uses academic research to back up its narrative, Claude warns: “… I am Anthropic's AI assistant and may hallucinate citations, so please verify all references independently.”

Those who use AI with little attention to fact-checking not only risk their work including completely fabricated information but, where accurate details have been included, also risk falling foul of copyright laws.

In December 2023, The New York Times sued OpenAI, the company behind ChatGPT, citing these very concerns: it claimed that the tech had used millions of articles from its archives without consent, sometimes reproducing text verbatim. Another key gripe was that this allowed readers who did not subscribe to the Times to access its material for free.

AI and Trust

Currently, AI cannot effectively be used independently of human intellect and reasoning. But the tech is still learning, which brings a further concern: how much should we teach it?

More recently in the UK, a collective of writers, photographers, musicians and other artistic professionals rejected a government proposal to create a copyright exemption to help AI developers such as OpenAI and Meta train their models more effectively. The issue, they say, is that training such tech not only leads to their work being unfairly duplicated but, the more capable the AI becomes, also puts human opportunity and value at stake.

Instead, as authors and illustrators have warned, greater protections need to be put in place.

So what’s the way forward here? Unfortunately, there is no clear solution.

The tech exists and will be used by those inclined to do so. Instead, the discussion must focus on establishing “how” AI should best be used, and agreeing the parameters for doing so, in order to protect creatives, their brands and their audiences.

When it comes to media, we know that AI-generated articles cannot be trusted to be accurate or reliable. The same can be said for using AI in media relations too.

The core component of a PR or marcomms role is establishing trust – whether that’s between PR agencies and their clients, institutions and their audiences or, of course, the media outlets they aim to engage with.

Whilst creating and enacting an effective PR and marcomms campaign is no doubt a time-intensive job, using AI to try and lighten the load by drafting content, creating pitches or press releases, or even producing op-ed articles, risks bringing that trust very much into question.

AI has its uses, but writing is not one of them.

The Future

A fellow delegate at the Newsrewired conference summed up the issue in a LinkedIn post reflecting on the event. Media relations demands an ability to deliver and respond to current affairs swiftly, and in ever-increasing volumes. But by relying on AI to take this burden from us, we miss out on what matters: talking to people, finding out what they need and then delivering it.

Trust aside, by handing the work to AI you risk losing the very thing that makes your individual message and efforts valuable.

“Doing the slow work of serving a community and building audience loyalty. Delivering a public service worth paying for and trying to make enough money to keep delivering that public service. You know, journalism,” she notes.

The same can be said of any outlet or institution hoping to engage with an audience – the humanity of communications must remain the central focus.

So what can we use AI for?

AI can be an effective research tool, provided humans then check the information it provides.

For example, by using AI to learn about your audience, analysing online conversations and social media activity, you can better understand their priorities, needs and complaints, and position yourself to address them.

Going further, AI can help time-pressed workers run wide online scans to gather ideas and information, helping you plan and pitch your own human-made content accordingly.

It can also break down complex and emerging topics into easily-understood summaries, giving you a swift education and pointing you to additional sources that might be worth your time and interest so that you can respond accordingly – again in your own human way.

So, in effect, AI does the “grunt work”, the equivalent of the laundry and the dishes, so that humans can apply their skills to the creative magic.

But using all of that information to enact a media relations strategy? That is best left to the very human experts.

And for institutions engaging with agencies: whilst an agency’s AI capabilities may certainly sound impressive, it pays to understand more about how it chooses to work with the technology and where it draws the line.

Regardless of where you stand, those working in or alongside the media, whatever their role, need to be better aware of what AI can do. Forewarned is forearmed.

Kerry is the Strategic Communications and Editorial Lead at BlueSky Education and a former BBC journalist. Recognised in the graduate management education arena as a leading authority on communications for the industry, Kerry has more than a decade of experience in the media and public relations.