As leading PR and marcomms specialists, we read and review a lot of content. That's an understatement, in fact, and our thirst for knowledge means we are encountering an ever-increasing volume of copy on business websites that has clearly been generated by GenAI platforms. The likes of ChatGPT, Claude and Copilot are being used to fill web copy, produce blogs and even write 'meet the team' bios and other supposedly 'personal' content.

Aside from this approach being a surefire way of eroding the human elements of a brand you have likely invested significant time in crafting, a key issue with all GenAI platforms is their tendency to make mistakes.

Many readers may have already experienced this for themselves, but if we look at the recruitment space in particular, there is a wealth of 'AI-gone-wrong' stories. AI interviewing platforms, for example, have been criticised for making prejudiced decisions based on applicants' age and ethnic background, and for talking over applicants, among many other problems.

AI copywriting errors

In copywriting, it's usually the Random Capitalisation Of Words, the emojis or the numbered lists that give the game away, but often it's the information GenAI tools provide that is simply wrong.

This is understandable: these tools are in their infancy, and their 'intelligence' is based purely on the publicly available information they were trained on. Just as you wouldn't lift something from Google verbatim, GenAI content must be reviewed, edited and amended before it offers any tangible value. But it does raise the question of why so many organisations are outsourcing their content to these tools without first checking the results.

We’ve identified several examples of AI copywriting fails, just a selection of which we’ve outlined below:

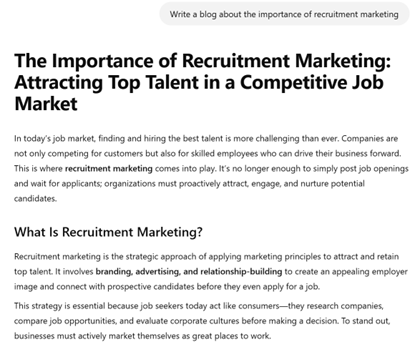

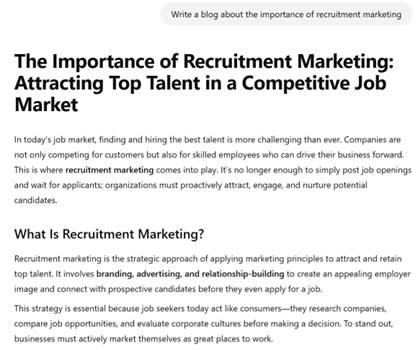

Same inputs? Same content

We have seen multiple examples where agencies, clearly in a hurry to produce any content whatsoever, have fed GenAI tools the same prompts as their competitors. And guess what? They all received the same content. We won't name the organisations, but featuring blogs or articles that are word-for-word identical to those on a rival firm's website doesn't suggest to potential clients or candidates that you are a caring or diligent organisation.

As you can see from the example below, entering the same prompts will produce the same copy (for reference, these prompts were entered into ChatGPT on two separate laptops in two different locations):

Spot the differences (clue – there aren’t any)

Leaving the prompts in

Again, there are multiple examples we could show, but author KC Crowne was found to have used GenAI to write her book, 'Dark Obsession', after text from her exchange with the AI tool was left in the published copy. This might sound ridiculous, but numerous organisations have done the same, making it abundantly clear that they've used AI rather than putting in the work themselves. If you are going to leverage ChatGPT or Copilot to produce your content, at least review it before publishing.

Yeah, using AI to write your book is embarrassing, but not nearly as embarrassing as leaving the ChatGPT prompt response in your published copy

— Ryan Ringdahl (@ryanringdahl.com) 17 January 2025 at 00:33

Lack of context

In a particularly embarrassing example, and from an organisation that should know better, Microsoft Travel had to pull a published AI-generated article that advised tourists to visit a 'beautiful' Ottawa-based food bank. While the organisation blamed this on human error rather than AI, the rest of the article strongly suggested that no human had been involved in its development. The feature, 'Headed to Ottawa? Here's what you shouldn't miss', was riddled with copy errors, used photos of an entirely different location, listed the food bank as the third most popular destination in the city, and ended with the bizarre recommendation: "Life is already difficult enough. Consider going into it on an empty stomach."

This appears to be based on the actual slogan on the food bank's website, "Life is challenging enough, imagine facing it on an empty stomach," and it highlights the risks facing those who rely on AI to produce content that will ultimately be read by potential clients, candidates and other stakeholders.

Beyond these high-profile and embarrassing cases, it's often in more nuanced content and detailed information that GenAI simply can't keep pace with humans. This is likely to change in the coming years, and some suggest that breakthroughs in quantum computing could make these platforms truly intelligent within the next decade. For now, though, it's worth relying on human insights rather than GenAI-produced ones if you want to add any value for your audience.

However, GenAI has another drawback beyond the mistakes it regularly makes:

AI and SEO

Around 71% of people now use AI tools for searches they would previously have run on Google, Bing, Yahoo or, for those of a certain vintage, even Ask Jeeves.

As we outlined in a recent blog, GenAI is changing the game when it comes to SEO. Businesses want to rank in organic search results, but they also want to be included in AI-generated answers because of the growing number of people using these platforms. However, the likes of ChatGPT won't simply regurgitate content they've already produced; instead, they seek out human-developed sources. This means that if you're leveraging AI to bolster your SEO and improve your search ranking, you're doing more harm than good. These platforms prioritise human insights because they tend to be more accurate than AI-generated content. You can find out more in our recent article, but any marketing professional is advised to seek guidance before potentially flushing their SEO work down the toilet.

Ultimately, AI is just technology, and it is by no means foolproof. The willingness of organisations to erode the human aspects of their brand, after spending years developing and building them up, is baffling, and all for the sake of saving some time (which, let's face it, will be lost when you try to rebuild your brand reputation once AI inevitably fails). AI can churn out information all day long, but it can't (yet) replicate genuine human experience and emotion. Content that tells stories, shares personal perspectives and makes you feel something is proving more resilient to AI disruption.

This is a particular problem for those who do leverage AI, because emotion is incredibly important if you want to sell. Harvard Business School professor Gerald Zaltman says that 95% of our purchase decision-making takes place in the subconscious mind, which means it is more emotional than logical.

If you do insist on using GenAI, at least use it with care. The dehumanisation of brands being seen across the business world will cause significant and lasting damage, and those who continue to put human effort into their content strategy are likely to be the ones that thrive.

Over the last 15 years, Vickie has worked with many talent acquisition teams and recruitment agencies to raise their profile across their specialist areas.

Vickie is an expert in demonstrating her clients’ thought leadership and showing them how to use it. She can turn a short conversation with you into gold.
